Belle Époque, Esperanto and racism

This is a weird post. It tries to connect certain ideas through history, culture and politics. I am not sure it manages to convince, but I am also not aware of its weak points.

The Belle Époque

To deeply understand Esperanto, start with the Belle Époque. It was "a period characterised by optimism, enlightenment, regional peace, economic prosperity, colonial expansion, and technological, scientific, and cultural innovations. In this era (...) the arts markedly flourished, and numerous masterpieces of literature, music, theatre and visual art gained extensive recognition".

The conquest of Africa by European nations certainly makes the époque much less belle. But we are not here to say anything is perfect; we are here to compare this period with the absolute disaster that followed: the two world wars.

In classical music (the thing I know and love), this peaceful period is definitely THE time of complex masterpieces and of the expansion of musical language. Think Debussy, Stravinsky, Mahler, Richard Strauss, Ravel, Rachmaninoff... In my opinion this was the ultimate climax of the art of music.

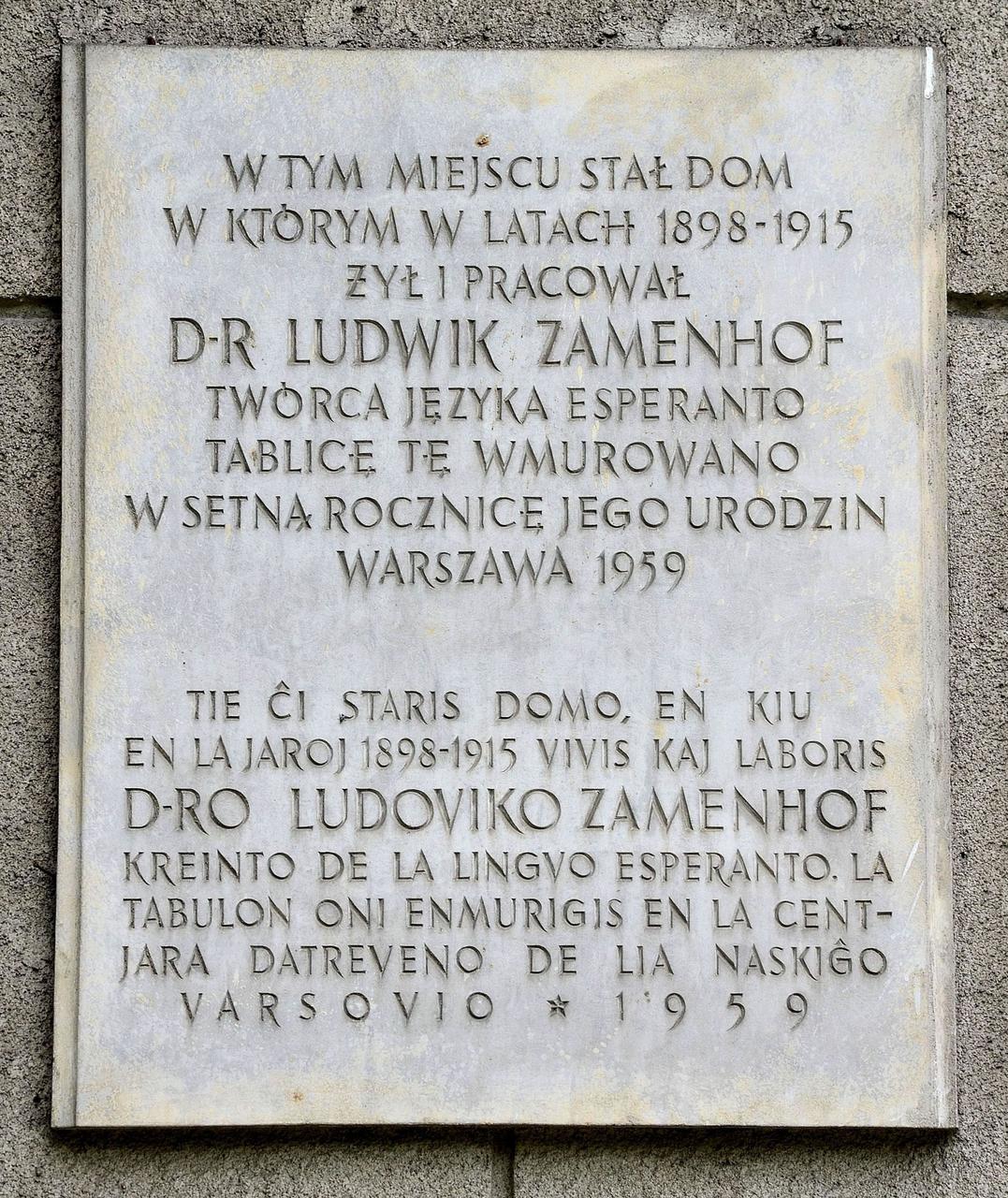

In technology, it was the time of the automobile, the metro, cheap mass-produced steel, and the spread of the railroad and the telegraph... largely products of the Second Industrial Revolution. Esperanto was created early in the Belle Époque, which gave it a chance to help feed the optimism.

What Esperanto is

Esperanto is simultaneously:

- not only a designed language;

- but also a masterpiece of literature: its design choices aren't absolutely perfect, but they work so well that no proposed improvement, however reasonable, has been enough to sway the community;

- achieved through a scientific breakthrough: the understanding of which parts of grammar are useful (kept) and which are merely inconsequential rules (dropped);

- amounting to...

- a new technology: a language so easy that it can be learned in only 200 hours of study;

- an economic breakthrough: achieving easy, precise communication after much less investment (of hours of language study and therefore money);

- and a cultural innovation: the idea of a language that actually deserves to be everyone's second language.

Esperanto is the only Star Trek technology already available today (think Federation Standard). Learning languages is not a skill or propensity that every human has; it is only one of many intelligences. Tomorrow, every child may need to spend 2,500 hours learning Mandarin. If the world were coordinated, they could instead spend only 200 hours learning Esperanto.

What Esperanto means

There is no great achievement without optimism. Like Star Trek and the Belle Époque, Esperanto expresses optimism:

- optimism about the power of reason

- optimism that truth can come to light (be expressed)

- optimism that human relations will improve

- optimism that peace will be attained and become the default

- optimism that racism shall become a thing of the past, a forgotten problem

- optimism that humanism will have priority over nationalism

The enemy: nationalism

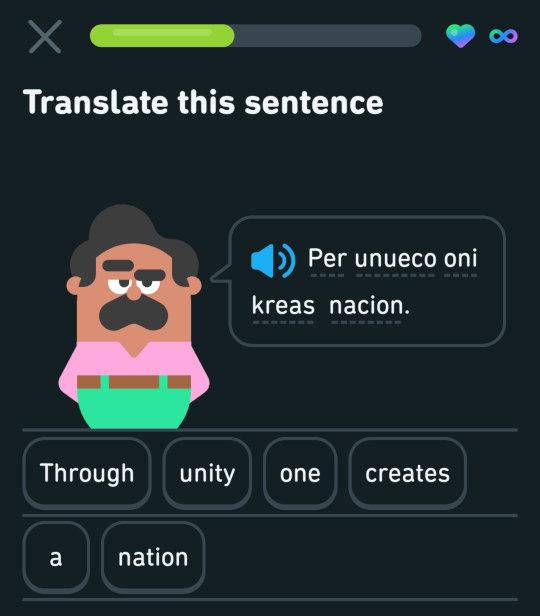

Weird nationalist content in the Esperanto course of Duolingo

This last item is unfortunately the least well understood. Nationalism continues to be an enormous force in the world, and people have not generally recognized it as the enemy it is: a menace to each item in the above list. I have seen more than a few nationalists among Esperantists. In fact, some of them think of Esperantujo (the Esperanto-speaking world) as a sort of nation...

What could be stranger, more presumptuous, than saying "Nice to meet you, samideano!" (The word means "fellow with the same ideas".)

Do you see nothing strange in a Youth Congress that has everyone sing a little Esperanto hymn at the beginning? Must we confirm the general suspicion that Esperanto is a cult?

Nationalism is the illusion that some brothers are family and others aren't. Nationalism is manipulation strong enough to make you join a war that is not in your interest but only in the interest of the powerful. Nationalism is feeling more pride in, and connection to, your immediate family than the accomplishments of distant people, which ultimately is not a wise discrimination, even if it is considered natural.

I will give you an example. These days I see a stupid idea repeated more and more: that white people never had a single creative moment, that they can only steal the creativity of other nations, present it as their own, profit from it and enslave other groups. Whites are just parasites. This idea loses sight of all cultural complexity and explains everything by the absolutely stupid criterion of skin color. It is ethno-nationalist, and therefore wrong.

Racism is a nationalism

One day I thought to myself, "today I will leave Schumann alone and get way out of my comfort zone. I will learn a bit about the history of hip hop, which I never listen to". So I found a video on the subject.

But suddenly I caught the presenter, a black historian of rap, stating that white people have an inner void of sadness that can only be filled by the happiness of black music. According to him, this is why white people love black music and keep stealing it. He illustrated this, of course, with well-known facts from the 20th-century rock and pop music industry. For instance, Elvis Presley grew up among black musicians and delivered black music to a racist establishment that wouldn't allow black musicians to rise.

As a white man who has always loved classical music, I find it quite offensive, of course, that the preacher would see me as inferior, uncreative and immoral a priori, based on the color of my skin. So when I heard that, my first reaction was one of stupid spite: I thought "keep your hip hop, dude; I will keep my Grieg".

But that is a dumb thought. It falls into these traps:

- Accepting the racial categories – in fact, accepting them as the most important parameter.

- Feeding into the racial war proposed by the mistaken historian brother.

- Throwing away the baby (hip hop) with the dirty bath water (the ridiculous theory).

But culture is much more complex, isn't it? There are many cultures simultaneously at play, and honestly, blackness isn't a legitimate musical parameter; it is quite imaginary. (This point will become clear gradually.)

The intelligent thought is to remember that culture feeds on itself and builds on top of itself, and should be free to do so; that culture is for all people; that Bach is for everyone just as hip hop is for everyone. The bro's way of thinking is therefore contrary to the way culture really works, contrary to inclusivity, and just straight racist, inasmuch as it prejudges a set of people by their skin color.

The correct thought is exemplified by Heitor Villa-Lobos, a great Brazilian composer born in 1887, the same year Esperanto was first published. Villa-Lobos composed a famous series of works titled "Bachianas Brasileiras", in which traits of Bach's music are blended with elements typical of Brazilian folk music. In so doing, he implicitly shows that the smart thing to do is indeed to import what is good; and Bach is sooo good, so widely considered the greatest composer who ever lived, that there is no doubt Bach should be converted into a Brazilian thing. The immortal German should become one of the components of the mestiço country, for sure.

The smart thing to do in culture is to import good things and ignore bad things. That is what classical music has always done, building on top of itself and of folk musics. To be clear, cultural "appropriation" is the wrong diagnosis. The problem was systemic racism that prevented the original artists from being as successful and rich as they deserved to be. The problem is racism, not appropriation. Appropriation is the correct, normal, endemic modus operandi of culture.

In other words, Elvis Presley was never wrong to sing in the genre that he loved. It would have been absurd to shout him down: "hey Elvis, stop that, that belongs to others!" Based on skin color!!! You think the music didn't belong to Elvis because of the color of his skin??? Preposterous!!! Can you imagine such a thing??? The racism was wrong – Elvis was right, he wasn't racist! The racism prevented black musicians from having all the success they deserved. Elvis had nothing to do with that! Elvis is guilty of no sin, let the man sing!!!

The historian's thinking is completely ignorant of how culture works. Although I would prefer Ravel, I hear bits of hip hop constantly, because I live in the world. Conversely, he is deluded if he thinks he was never influenced by Tchaikovsky; everyone has heard Tchaikovsky, whether they wanted to or not. We are all exposed to culture, it influences us, and there is no escape.

He talks as if the software used to create hip hop weren't full of the work of European engineers...

His thinking tends to mirror the identitarian movement. That's nothing to be proud of. This is what happens when minds get contaminated by nationalism of any kind.

Recognize that this moment is anti-Enlightenment

Notice how much humanity accomplished during the Belle Époque; realize how many great dreams arose in that period; consider how much waste, how much shattering of those dreams, the two World Wars represented: wars that would not have been possible without the formidable power of nationalism. Think of how much further along civilization might be if the wars somehow hadn't occurred...

"(...) help people get used to the idea that each one of them should see their neighbors only as a human being and a brother."

And then think about the present moment. Think about the polarization, the extremism found on all political sides... When was a Zamenhof more needed?

I'll tell you what I think:

We must seek to be centered, subtle and rational in our expressed views.

Ideologies are good while they remain subtle, well written and well read. They turn bad in the messy behavior of the masses, which ends up directly contradicting the books that propelled them. So reject theories that:

- cynically negate rationality;

- cynically affirm the relativism and subjectivity of truth(s), declaring objective truth unreachable in principle;

- cynically affirm that human "races" are forever in opposition;

- manipulate people, striving to consolidate a new nation through the old technique of pointing to a supposed common enemy guilty of all problems, all while professing the value of inclusivity.